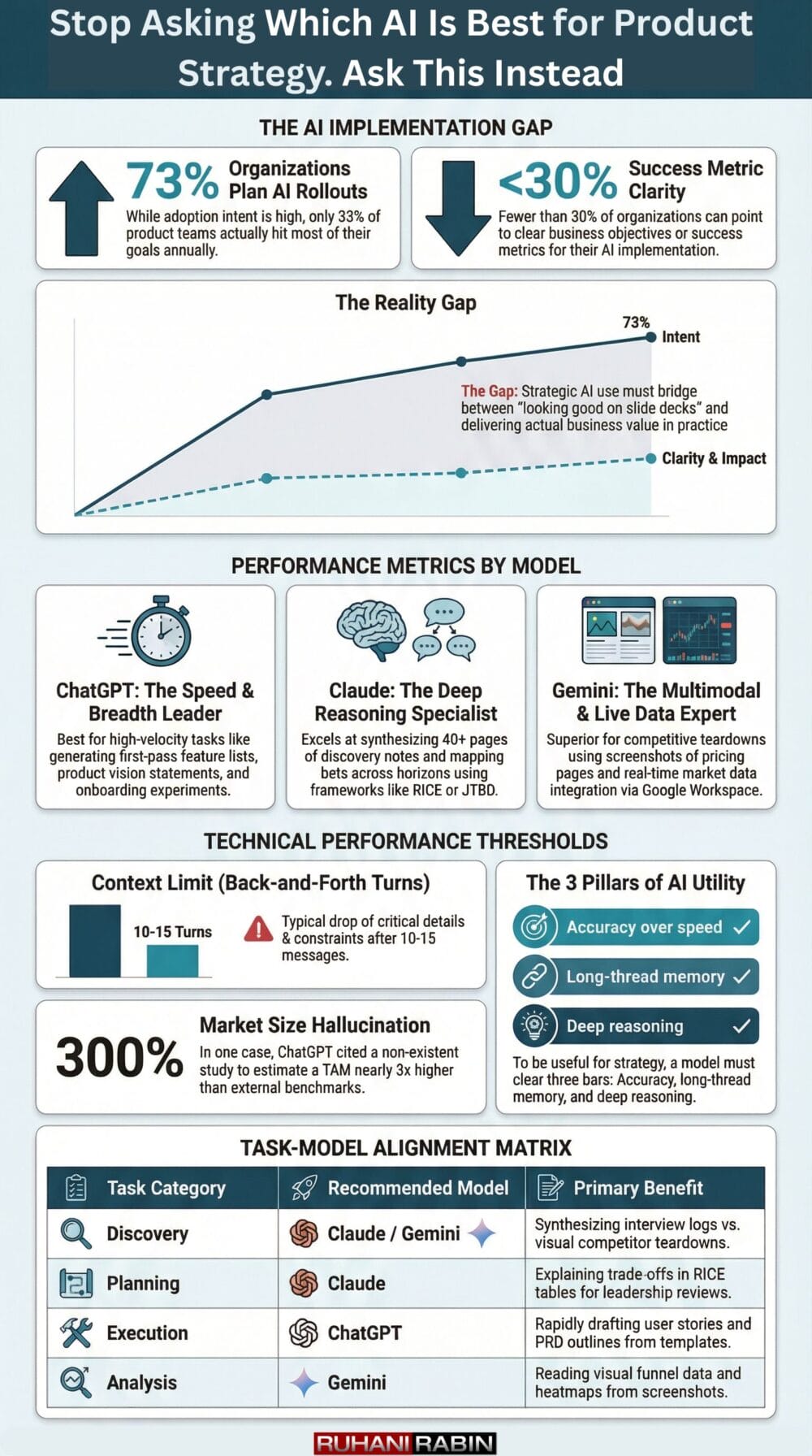

Only about a third of product teams hit most of their goals in a given year, depending on which survey you read. At the same time, about 73% of organizations say they plan to roll out AI across products and internal work, yet fewer than 30% can point to clear business objectives or success metrics. Research on using artificial intelligence (AI) in developing marketing strategies highlights this gap as a critical barrier to successful implementation. The numbers look good on slide decks and awful in practice. That gap is where AI for product strategy either becomes a force multiplier or just another line item in the budget.

Product founders, CPOs, and WordPress or SaaS builders feel pressure from boards and teams to pick a side in the ChatGPT vs. Claude vs. Gemini debate. People ask which AI is best, which one writes a better product roadmap, or which one helps most with product management. After more than twenty-five years building products and applying machine learning in real companies, Ruhani Rabin has a blunt answer: the model is rarely the problem. The match between the tool and the job is what matters.

This article skips the hype and the marketing claims. It breaks down how ChatGPT, Claude, and Gemini actually behave when used as AI tools for product managers and founders who care about shipping and retention. You will see where each model shines, where it fails, how they compare across discovery, planning, execution, and analysis, and how Ruhani uses them inside real consulting work. By the end, the question shifts from which AI is best to which AI fits a specific product strategy task in front of you.

What Makes AI Useful for Product Strategy (And What Doesn’t)

Before picking a model, it helps to spell out what real product strategy work looks like. For a SaaS or WordPress product, that work spans discovery, planning, execution, and analysis. Discovery covers research and competitive review, planning covers roadmaps and prioritization, execution covers user stories and specs, and analysis covers feedback and metrics.

When you use AI for product strategy, you are making bets that move revenue, churn, and runway. Any tool has to clear three basic bars if it wants a seat at your table:

- Accuracy matters more than how fast words appear on screen. If an AI gives you a wrong retention driver or a fake market number, fast output just means you ship the wrong thing sooner. A slightly slower tool that gets simple facts and math right, as your spot checks confirm, is the better trade.

- Long memory across a thread is vital for strategy work. You want the model to remember earlier discovery notes, business goals, and trade-offs instead of dropping them mid-session. Short memory forces you to repeat context, which leads to shallow advice and lots of copy-paste.

- Deep reasoning separates a research sidekick from a true strategy helper. Surface summaries are easy; weighing trade-offs and stress-testing assumptions is harder. When you push on edge cases or ask it to apply a framework, the better models explain why a choice makes sense, not just what you might do.

As Marty Cagan writes in Inspired, “The job of product management is to discover a product that is valuable, usable, and feasible.” AI can support that work, but it cannot define value or feasibility on its own.

Even the best AI for product strategy will never attend a user call or sit in on a tense board review. It cannot replace the empathy that comes from watching people fail in your onboarding or the pattern sense that senior leaders build over years. You still have to judge bias, privacy risk, and impact on your team.

Many teams mix up adding AI automation features to their product with using AI inside strategy work. One is about shipping an AI-powered feature that end users touch, the other is about using models as thinking partners behind the scenes. No model wins every type of work, so the useful question is which strengths map to which tasks.

ChatGPT for Product Strategy: Speed and Breadth

For most teams, ChatGPT is the first stop when they try AI for product strategy. It is easy to access, has a large community, and feels fast enough to use in daily product meetings. If someone asks for a ChatGPT vs. Claude vs. Gemini comparison, they often mean ChatGPT vs. the rest because it set the standard for what people expect from this kind of tool.

Where ChatGPT Excels

ChatGPT shines when you need speed and variety. For brainstorming, it can throw out dozens of feature ideas, messaging angles, or onboarding experiments in a few minutes. If you work on a product roadmap, it can list options based on your goals and constraints, and then you pick and refine what makes sense. This makes it handy for breaking blank-page syndrome without dragging an entire team into a workshop.

Common ways product teams use ChatGPT include:

- Generating first-pass feature idea lists

- Drafting product vision statements and positioning options

- Producing alternative onboarding flows to compare

- Suggesting experiments and A/B test concepts

It also does strong work as a structuring tool. Paste a handful of customer interview notes or support tickets and ask it to cluster pain points, and you get a first pass that points you in the right direction. From there it can draft user stories, acceptance criteria, or PRD outlines that match the format you already use. Many product owners treat these drafts as rough clay that they reshape rather than text they send straight to Jira.

When you are new to a space, ChatGPT helps you ramp up faster than a raw search engine. It can summarize common positioning patterns, competitor categories, and high-level trends so you do not miss the basics. A simple prompt that describes your target user can produce ten value proposition variations in under two minutes, which you can later test with your team or customers. GPT-4 handles this kind of multi-step work better than GPT-3.5, though both start to lose earlier context as a conversation grows long.

Where ChatGPT Falls Short

The same skills that make ChatGPT feel smooth also make it risky for strategy. It will happily invent market size numbers, market research reports, or customer quotes that sound real but are not grounded in any source. Because it was trained on broad internet text, a lot of its advice reads like an average blog post, not a sharp point of view that wins in a tight B2B category. If you paste this kind of output directly into decks, it can give stakeholders a false sense of certainty.

Context limits also show up fast in deeper work. After ten to fifteen turns in a complex conversation, it starts dropping important details about your product, segments, or constraints, so you spend time reminding it of facts you already shared. It also struggles with nuanced trade-offs such as choosing between paying down technical debt and chasing a big logo when you only give it vague inputs. In one real case, a founder asked it to size a niche B2B SaaS market, and it cited a study that did not exist and a total addressable market nearly three times higher than any external benchmark.

You can still use ChatGPT for that kind of task, but only as a rough starting point. Think of it as a fast junior analyst who writes quickly but needs a senior person to check every number. Treated that way, it is powerful for exploration and drafting. Treated as a decision engine, it can steer your product strategy in the wrong direction.
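To make the "senior person checks every number" step concrete, here is a minimal Python sketch, assuming you have gathered a few independent benchmark figures (analyst reports, public filings, your own bottom-up estimate) for the same market. The function name and the 1.5× threshold are hypothetical choices for illustration, not an industry standard.

```python
def flag_outlier(ai_estimate: float, benchmarks: list[float], max_ratio: float = 1.5) -> bool:
    """Return True when an AI-supplied figure sits far outside external benchmarks.

    max_ratio is an arbitrary tolerance chosen for this sketch.
    """
    if not benchmarks:
        # No independent source at all: treat the claim as unverified.
        return True
    low, high = min(benchmarks), max(benchmarks)
    # Flag anything well above the highest or well below the lowest benchmark.
    return ai_estimate > high * max_ratio or ai_estimate < low / max_ratio


if __name__ == "__main__":
    # Illustrative numbers only: an AI estimate roughly three times the benchmarks.
    print(flag_outlier(300, [90, 100, 110]))  # flagged
    print(flag_outlier(105, [90, 100, 110]))  # within range
```

A flagged number is not necessarily wrong, but it should not reach a deck until someone can trace it to a real source.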

Claude for Product Strategy: Depth and Reasoning

When the question shifts from speed to depth, Claude enters the conversation. Product leaders who work with long research packs, multi-page PRDs, or heavy discovery notes tend to prefer it. In many ChatGPT-versus-Claude debates about product management, Claude wins on reasoning, while ChatGPT wins on raw pace and the large plugin and prompt community around it.

Where Claude Excels

Claude stands out when you want to work with large amounts of text in one place. You can drop in dozens of pages of discovery interviews, support transcripts, or strategy docs and still keep a single thread. Instead of chopping content into pieces and stitching answers by hand, you can ask it to look across the whole set and keep track of cross-cutting themes. That long-context behavior feels closer to how a human strategist reads and synthesizes.
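Whether you can keep one thread or have to start "chopping content into pieces" comes down to the model's context budget. A minimal Python sketch, assuming the common rough heuristic of about four characters per token for English text and a budget you look up for your own model and plan; the numbers in the usage are placeholders, not any vendor's actual limit.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a document from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def chunk_for_context(docs: list[str], context_budget: int) -> list[list[str]]:
    """Group documents into batches that each fit under context_budget tokens.

    A single document larger than the budget still gets its own batch;
    splitting oversized documents further is left out of this sketch.
    """
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for doc in docs:
        cost = estimate_tokens(doc)
        # Close the current batch if this document would push it over budget.
        if current and used + cost > context_budget:
            batches.append(current)
            current, used = [], 0
        current.append(doc)
        used += cost
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    notes = ["interview transcript " * 200] * 40  # placeholder interview notes
    batches = chunk_for_context(notes, context_budget=100_000)  # placeholder budget
    print(f"{len(batches)} batch(es) needed")
```

One batch means a long-context model can read everything in a single pass; several batches means you are back to stitching answers together by hand.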

Its reasoning also tends to be more deliberate. When you push it to compare options, challenge its ideas, or apply a framework such as Jobs To Be Done or RICE, it often explains its steps and admits uncertainty. That makes it well-suited to trade-off analysis, like balancing UX friction points against feature breadth or mapping bets across horizons. You can ask it to list edge cases and failure modes, and it will usually surface cases that many teams forget.
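Since RICE comes up repeatedly, it helps to see the arithmetic a model's output should match. RICE, as popularized by Intercom, scores an initiative as Reach × Impact × Confidence ÷ Effort. A minimal Python sketch; the initiative names and numbers in the usage are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Initiative:
    name: str
    reach: float       # users or events affected per period, e.g. per quarter
    impact: float      # Intercom's scale: 3 massive, 2 high, 1 medium, 0.5 low, 0.25 minimal
    confidence: float  # 0.0-1.0, e.g. 0.8 for 80%
    effort: float      # person-months

    @property
    def rice(self) -> float:
        # RICE = (Reach * Impact * Confidence) / Effort
        return self.reach * self.impact * self.confidence / self.effort


def rank(initiatives: list[Initiative]) -> list[Initiative]:
    """Sort initiatives from highest to lowest RICE score."""
    return sorted(initiatives, key=lambda i: i.rice, reverse=True)


if __name__ == "__main__":
    backlog = [
        Initiative("SSO for enterprise", reach=150, impact=3, confidence=0.5, effort=4),
        Initiative("Onboarding checklist", reach=2000, impact=1, confidence=0.8, effort=2),
    ]
    for item in rank(backlog):
        print(f"{item.name}: {item.rice:.1f}")
```

Having the formula in code makes it easy to check whether a model's RICE table actually adds up before it lands in a leadership meeting.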

Claude is particularly strong for document analysis. You can feed it a forty-page research deck or a full product strategy and ask it to extract the three biggest insights, highlight gaps, and suggest follow-up research. In one consulting project, Ruhani used it to scan forty pages of interview notes and it pulled out three recurring jobs, backed them with exact quotes, and matched them to missing features. That saved several hours compared with manual reading while still leaving room for human review.

Where Claude Falls Short

That depth comes with trade-offs. Claude often feels slower than ChatGPT for simple questions, especially on the highest-tier model. If you just want ten subject line ideas or a quick competitor list, waiting for a long, careful answer can feel like overkill. In those cases, its main strength turns into friction.

It can also feel more cautious in creative work. When you ask for bold bets, unusual experiments, or strange marketing angles, it tends to stay closer to safe, middle-of-the-road ideas. That can be helpful when you want grounded plans but limiting when you need one or two wild options to stress-test with your team. Many people pair it with ChatGPT for that reason, using each for a different flavor of idea.

Claude still trails ChatGPT and Gemini on workflow automation and integrations. There are fewer plugins, fewer native hooks into tools like analytics platforms or CRMs, and less community content on clever product prompts. On top of that, the Opus tier costs more per token than many teams expect, which adds up if you run heavy document analysis each week. For deep strategy reviews the value is clear, but for everyday tasks you may not need that level of spend.

Gemini for Product Strategy: Integration and Multimodal Analysis

Gemini sits in a different place from ChatGPT and Claude because Google built it to sit close to search and Workspace tools. That makes it interesting for product strategy work that leans on live data, analytics, and visual assets. While it still lags in some reasoning tasks, its integration story fills gaps that the other models cannot touch yet.

Where Gemini Excels

The tight link with Google products is a clear strength. Gemini can read Docs, Sheets, and Slides in the same breath as it queries the web, which means you avoid constant exporting and copy-paste work. When you want to review funnel data from Analytics next to a pitch deck, it can look at both and comment on patterns. That kind of cross-tool view is handy for CPOs and founders who live inside Google Workspace all day.

Gemini also handles multimodal input well. You can upload screenshots of competitor pricing pages, onboarding flows, or dashboards and ask it to explain differences and themes. In practice, that means you do not have to retype feature grids or price points; the model pulls them out of the images. For product strategy, this makes competitive teardown work faster and less boring.

Because it can look up fresh information on the web, it works better than models with hard knowledge cutoffs for news-driven research. You can ask about very recent product releases, pricing changes, or market events and get more current context. For many teams, the free Gemini tier already covers this kind of research, and only very heavy users need the paid Advanced plan. From a cost standpoint, that makes it an easy piece of a broader AI stack.

Where Gemini Falls Short

Gemini still trails Claude when you push it into dense strategic reasoning. Its answers often read like summaries of what is in the data rather than sharp opinions on what you should do. On long threads, it can also drop parts of the earlier context sooner than Claude would.

The tool also has less community material and fewer ready-made product prompts, so you may spend more time experimenting to get what you want. Multimodal analysis can misread charts or skip small details in screenshots, so you still need to check anything that drives a major call. Right now it fits best as a real-time research and visual analysis helper, not as your only deep strategy partner.

The Real Comparison: Matching AI Tools to Product Strategy Tasks

Once you step back from brand names, the only comparison that matters is how each model fits into specific product strategy tasks. This is where most ChatGPT vs. Claude vs. Gemini comparison threads go wrong. They chase a single winner instead of a clear map of which tool helps with which part of the job.

A simple way to think about AI for product strategy is to break your work into discovery, planning, execution, and analysis. Then ask which model gives you the best signal for each slice, given your budget and habits. In practice, most strong teams use at least two tools and pick one as the default in each area.

- Discovery and research work well when you blend broad and deep tools. For high-level market research and trend checks, ChatGPT and Gemini both do fine, with Gemini having the edge when very recent data matters. When you need to mine customer interviews, support logs, or survey comments, Claude does a better job of holding the whole dataset in view and surfacing themes with quotes. For competitive tear-downs that include pricing screenshots and landing pages, Gemini has a slight edge thanks to multimodal input, with Claude as a strong follow-up for long written reviews and RFPs.

- Planning and prioritization lean heavily on structured thinking. Claude tends to give the clearest output when you ask it to build RICE tables, opportunity trees, or other planning frameworks, and it explains trade-offs in a way that holds up in leadership meetings. When you only need skeleton templates for roadmaps or OKR drafts, ChatGPT is more than enough and feels faster. For hard trade-off calls, such as choosing between a growth bet and a reliability fix, Claude again provides more nuance than either ChatGPT or Gemini.

- Execution work favors tools that write quickly in your existing format. ChatGPT is excellent at spitting out user stories, PRD drafts, release notes, and internal memos once you show it a few examples from your team. Claude is better when you want to check those artifacts for gaps, conflicting requirements, or missed edge cases because it can hold the broader context of your product and strategy. Gemini plays a smaller role here, aside from cases where you want to read off charts or dashboards inside Sheets while you write specs.

- Analysis and measurement bring you back to research-style work. Claude is the strongest choice for synthesizing large volumes of user feedback across NPS comments, support tickets, and interview notes, since it can keep a very long thread alive. ChatGPT still wins when you just want a quick read on sentiment from a small sample, for example pasting ten reviews and asking for a pattern summary. Gemini stands out whenever charts, funnels, or heatmaps enter the picture because it can read and explain visual data in a single pass while also pulling in fresh market context.
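One way to make this map explicit for a team is a small lookup table. A minimal Python sketch that encodes the defaults from the list above; the task keys and the "default tool plus optional follow-up" structure are assumptions of this sketch, not a standard taxonomy.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    DISCOVERY = "discovery"
    PLANNING = "planning"
    EXECUTION = "execution"
    ANALYSIS = "analysis"


# (stage, task) -> (default tool, optional follow-up tool), summarizing the list above.
# Task keys are hypothetical labels chosen for this sketch.
ROUTES: dict[tuple[Stage, str], tuple[str, Optional[str]]] = {
    (Stage.DISCOVERY, "market_overview"): ("ChatGPT", "Gemini"),
    (Stage.DISCOVERY, "recent_market_news"): ("Gemini", None),
    (Stage.DISCOVERY, "interview_synthesis"): ("Claude", None),
    (Stage.DISCOVERY, "competitive_teardown"): ("Gemini", "Claude"),
    (Stage.PLANNING, "framework_tables"): ("Claude", None),
    (Stage.PLANNING, "roadmap_template"): ("ChatGPT", None),
    (Stage.PLANNING, "hard_tradeoff"): ("Claude", None),
    (Stage.EXECUTION, "draft_stories_prds"): ("ChatGPT", "Claude"),
    (Stage.ANALYSIS, "large_feedback_synthesis"): ("Claude", None),
    (Stage.ANALYSIS, "small_sample_sentiment"): ("ChatGPT", None),
    (Stage.ANALYSIS, "charts_and_funnels"): ("Gemini", None),
}


def pick_tool(stage: Stage, task: str) -> tuple[str, Optional[str]]:
    """Return the default tool and optional reviewer for a known task."""
    try:
        return ROUTES[(stage, task)]
    except KeyError:
        raise KeyError(f"No route for {stage.value}/{task}; decide case by case") from None


if __name__ == "__main__":
    print(pick_tool(Stage.EXECUTION, "draft_stories_prds"))  # draft, then review
```

Keeping the map in one place makes it easy to revise when your budget changes or a model update shifts its strengths.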

For most teams, a simple stack works well. ChatGPT Plus together with free Gemini gives broad coverage for brainstorming, drafting, and live research. You add Claude Pro on top only if you regularly handle long research packs or need its deeper reasoning for heavy trade-off work. The model mix should follow your calendar and pain points, not the other way around.

Why Your Data Strategy Matters More Than Your AI Choice

A lot of debate around ChatGPT, Claude, and Gemini misses the point. People treat the model as the moat. In practice, these models keep improving and keep getting cheaper, which means your competitors can buy the same power that you can. The real advantage comes from the data and insight you feed into them, plus the product sense that acts on the output.

All three tools train on wide public data, so if you only ask vague questions you will get vague answers. Ask any of them to help you prioritize features for a random B2B SaaS product and you will see the same safe advice about talking to customers and shipping faster. The picture changes when you bring your own research, analytics, and context. A prompt that includes churn by segment, revenue per cohort, and actual interview quotes gives the model something sharp to work with, and the guidance moves from generic to specific.

W. Edwards Deming put it simply: “In God we trust; all others must bring data.”
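The difference between a vague ask and a data-rich one is easiest to see in the prompt itself. A minimal Python sketch, assuming you already export churn by segment, revenue per cohort, and interview quotes from your own tools; the function name, the monthly churn framing, and the sample values are hypothetical.

```python
def build_prioritization_prompt(
    question: str,
    churn_by_segment: dict[str, float],
    revenue_by_cohort: dict[str, float],
    quotes: list[str],
) -> str:
    """Assemble a context-rich prompt from your own (aggregated) product data."""
    lines = [question, "", "Churn by segment (monthly, assumed):"]
    for segment, rate in churn_by_segment.items():
        lines.append(f"- {segment}: {rate:.1%}")
    lines += ["", "Revenue per cohort (USD):"]
    for cohort, revenue in revenue_by_cohort.items():
        lines.append(f"- {cohort}: {revenue:,.0f}")
    lines += ["", "Customer interview quotes:"]
    lines += [f'- "{quote}"' for quote in quotes]
    # Ask the model to tie each recommendation back to the data it was given.
    lines += ["", "Base every recommendation on the data above and name the data point that supports it."]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prioritization_prompt(
        "Which feature should we prioritize next quarter?",
        {"SMB": 0.042, "Mid-market": 0.018},
        {"2024-Q1": 12500, "2024-Q2": 15800},
        ["Setup took a week.", "I could not find the export button."],
    ))
```

Aggregated figures like these give the model something sharp to reason about without handing over raw customer records.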

That does not mean you should paste every sensitive metric into a public web chat. Feeding raw customer lists, revenue figures, or unreleased roadmap items into shared tools can leak information in ways that are difficult to reverse. Many larger teams use enterprise plans or local AI tools when they want AI for product strategy that touches confidential data. Ruhani has seen the difference across more than ninety product audits. Teams with clean data and strong product thinking get faster and clearer outcomes from AI. Teams with fuzzy goals and messy tracking just create bad strategy decks more quickly.
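If you do use a shared tool, stripping obvious identifiers before pasting reduces the risk but does not remove it. A minimal Python sketch using the standard `re` module; the patterns only catch email addresses, currency amounts, and customer names you list yourself, and they are assumptions to adapt, not a complete data-loss-prevention tool.

```python
import re

# Hypothetical patterns for illustration; extend them for your own data.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "AMOUNT": re.compile(r"[$€£]\s?\d[\d,]*(?:\.\d+)?(?:\s?[kKmM])?"),
}


def redact(text: str, customer_names: list[str]) -> str:
    """Replace listed customer names, emails, and currency amounts with placeholders."""
    for name in customer_names:
        # Escape the name so characters like "." or "&" are matched literally.
        text = re.sub(re.escape(name), "[CUSTOMER]", text, flags=re.IGNORECASE)
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    note = "Acme Corp (jane@acme.com) renewed at $48,000."
    print(redact(note, ["Acme Corp"]))
```

Anything the patterns miss, such as unreleased feature names, still needs a human read before it leaves your systems.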

How Ruhani Rabin Uses AI for Product Strategy (And Where Humans Still Win)

Ruhani does not treat any of these tools as magic. He treats them as sharp, narrow assistants that plug into a clear consulting workflow. That workflow mirrors what he does as a strategic product consultant, AI implementation partner, and fractional CPO for SaaS and WordPress companies.

- Discovery starts with understanding the client’s own data. Claude analyzes user research, support conversations, and usage notes to highlight three to five core pain points and the jobs behind them. Ruhani reads both the raw material and Claude’s summary, then adjusts labels and groupings based on his experience. The model does the heavy lifting on pattern spotting while he makes the final calls.

- Competitive research then broadens the view. Gemini pulls recent product releases, pricing changes, and public statements from direct and near competitors. It also reads screenshots from marketing sites and pricing pages, so there is no need for manual retyping of tiers or features. This gives a faster, clearer picture of where the client’s product stands in its market.

- Strategy framing comes next. Claude maps pain points into Jobs To Be Done, value props, and prioritization grids that line up with revenue and retention goals. ChatGPT helps turn that structure into readable documents, slide outlines, and draft roadmaps that land well with founders, engineers, and go-to-market teams. At this stage Ruhani picks what to keep and what to drop, so the final direction still reflects real-world constraints and culture.

- Validation closes the loop. Before any AI-assisted strategy reaches a client, Ruhani checks it against his experience and what he heard firsthand in calls. If something feels off, he adjusts the logic, rewrites sections, or reruns a step with tighter prompts. In many projects, AI flags that onboarding feels slow, while his direct observation shows the true issue is cognitive overload from a dozen steps on the first screen, which calls for a UX redesign rather than speed work alone.

Across this workflow, AI saves hours on text-heavy tasks and competitive research, yet humans still own empathy, trade-offs, and ethics. No model can look a founder in the eye and push back on a risky feature bet or weigh the moral cost of aggressive automation in support. Ruhani uses AI to clear the noise so he can spend more time on those high-value conversations.

Avoiding the “AI for AI’s Sake” Trap in Product Strategy

The fastest way to waste time with AI is to start from the tool instead of the problem. Many teams say they want AI for product strategy because a board member asked about it or a competitor bragged on LinkedIn. They rush to plug models into their process without writing down what hurts or how they will measure improvement.

You can spot this trap from a few patterns:

- People spend more time crafting fancy prompts than they save in work.

- Strategy docs filled with AI text sit untouched because they do not match how the team actually plans or reviews work.

- Leaders point at AI output as the reason for big bets even when their gut says the choice does not feel right.

Peter Drucker warned, “There is nothing so useless as doing efficiently that which should not be done at all.” That applies directly to AI experiments that ignore real product problems.

Ruhani uses a simple check before bringing in any model for a new task:

- Write one sentence that describes the current process and pain, for example manual review of forty interviews that eats eight hours.

- Define what better would mean in numbers, such as cutting that effort to two hours while keeping the same insight quality.

- Run a small side-by-side test where you compare human-only work with AI-assisted work before you trust the new flow at scale.
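The three-step check above fits in a few lines. A minimal Python sketch using the forty-interview example (eight hours today, a two-hour target); the 1-5 insight-quality score is a hypothetical rubric your reviewers would need to define, and the function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    hours: float          # time spent on the task
    insight_score: float  # reviewer rating of insight quality, 1-5 (hypothetical rubric)


def time_saved_share(baseline: Trial, assisted: Trial) -> float:
    """Fraction of the baseline time saved by the AI-assisted run."""
    return (baseline.hours - assisted.hours) / baseline.hours


def passes_roi_check(
    baseline: Trial, assisted: Trial, target_hours: float, min_quality_ratio: float = 1.0
) -> bool:
    """True if the AI-assisted run hits the time target without losing insight quality."""
    fast_enough = assisted.hours <= target_hours
    quality_kept = assisted.insight_score >= baseline.insight_score * min_quality_ratio
    return fast_enough and quality_kept


if __name__ == "__main__":
    human_only = Trial(hours=8, insight_score=4)
    ai_assisted = Trial(hours=2, insight_score=4)
    print(f"Time saved: {time_saved_share(human_only, ai_assisted):.0%}")
    print("Keep the new flow:", passes_roi_check(human_only, ai_assisted, target_hours=2))
```

Requiring both conditions guards against the common failure where AI makes the work faster but the insights thinner.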

Ruhani has a hard rule here. If you cannot explain which problem AI will solve in one clear sentence, you are not ready to use it for that part of product strategy. Tool choice should follow pain, not noise from peers or vendors.

Conclusion

When you strip away branding, the core message is simple. The real question is not which AI is best in some general sense, but which one fits the product strategy task in front of you. ChatGPT, Claude, and Gemini each carry different strengths, and smart teams match those strengths to discovery, planning, execution, or analysis rather than trying to crown a single champion.

- ChatGPT works well as your fast generalist. It is the go-to for brainstorming, templates, short summaries, and broad research that starts from zero knowledge. Most founders and product managers can justify its cost on time savings from drafting alone.

- Claude fits deeper analysis. It shines when you handle long research packs, complex trade-offs, and strategy frameworks that need careful reasoning and long memory. If your week is filled with documents and hard prioritization debates, it deserves a spot in your stack.

- Gemini adds strength on live data and visuals. Its ties to Google tools and multimodal input make it a strong pick for competitive review, pricing analysis, and reading charts or funnels. Even the free tier can give your strategy work a clear boost when used in the right places.

Above all, clean data and sound product thinking matter more than which model you subscribe to. AI makes good strategies faster and bad ones more dangerous. Pick one painful step in your current process, try one tool against it for a few weeks, and measure the time saved and clarity gained. Then keep what works and leave the rest.

FAQs

Below are blunt answers to questions product leaders ask often.

Which AI Should I Use If I Can Only Afford One Subscription?

If you can only pay for one, start with ChatGPT Plus. For about twenty dollars each month you get strong help for brainstorming, PRD drafts, and general research, which covers much of the product work. Use free Gemini when you need live web data or screenshot checks. Add Claude Pro later only if long documents and hard trade-off analysis are regular parts of your week.

Can I Use Free Versions of These AIs for Product Strategy Work?

Yes, free tiers work for many tasks. ChatGPT Free with GPT-3.5 is fine for light brainstorming, summaries, and small prompts, though it forgets context faster and makes more errors than GPT-4. Gemini Free can handle most research and screenshot work, but it may be slower at busy times. Claude Free is strong but has tight message limits, so save it for your most important questions.

Is It Safe To Input Confidential Product Strategy Data Into These AIs?

Treat public web versions of these tools as places where you never paste sensitive data. Keep customer names, revenue figures, and unreleased features out of the prompt. If you must work with private information, look at enterprise plans that switch off training on your data and set clear limits on use. For high-stakes cases, open-source models such as Llama or Mistral running on your own stack give more control, though they need extra setup.

How Do I Know If AI-Generated Strategy Insights Are Accurate?

Treat AI like a bright but very junior analyst, not a final decision maker. Never ship a strategy or roadmap change based only on its text. Cross-check numbers and research claims against primary sources, and test whether the logic matches your own domain knowledge. If nobody on your team can explain where a big claim came from or point to a source, you should not use it to guide product bets.

Will AI Replace Product Strategists or CPOs?

AI changes the tools product leaders use, but it does not replace the role. Models can scan data, spot patterns, and draft options faster than people, yet they still cannot sit with a customer, manage politics, or carry real accountability for bets. Strong leaders use AI to clear low-level work so they can spend more time on vision, trade-offs, and coaching. Those skills stay human.